“Why did Apple spend $400M to acquire Shazam?”

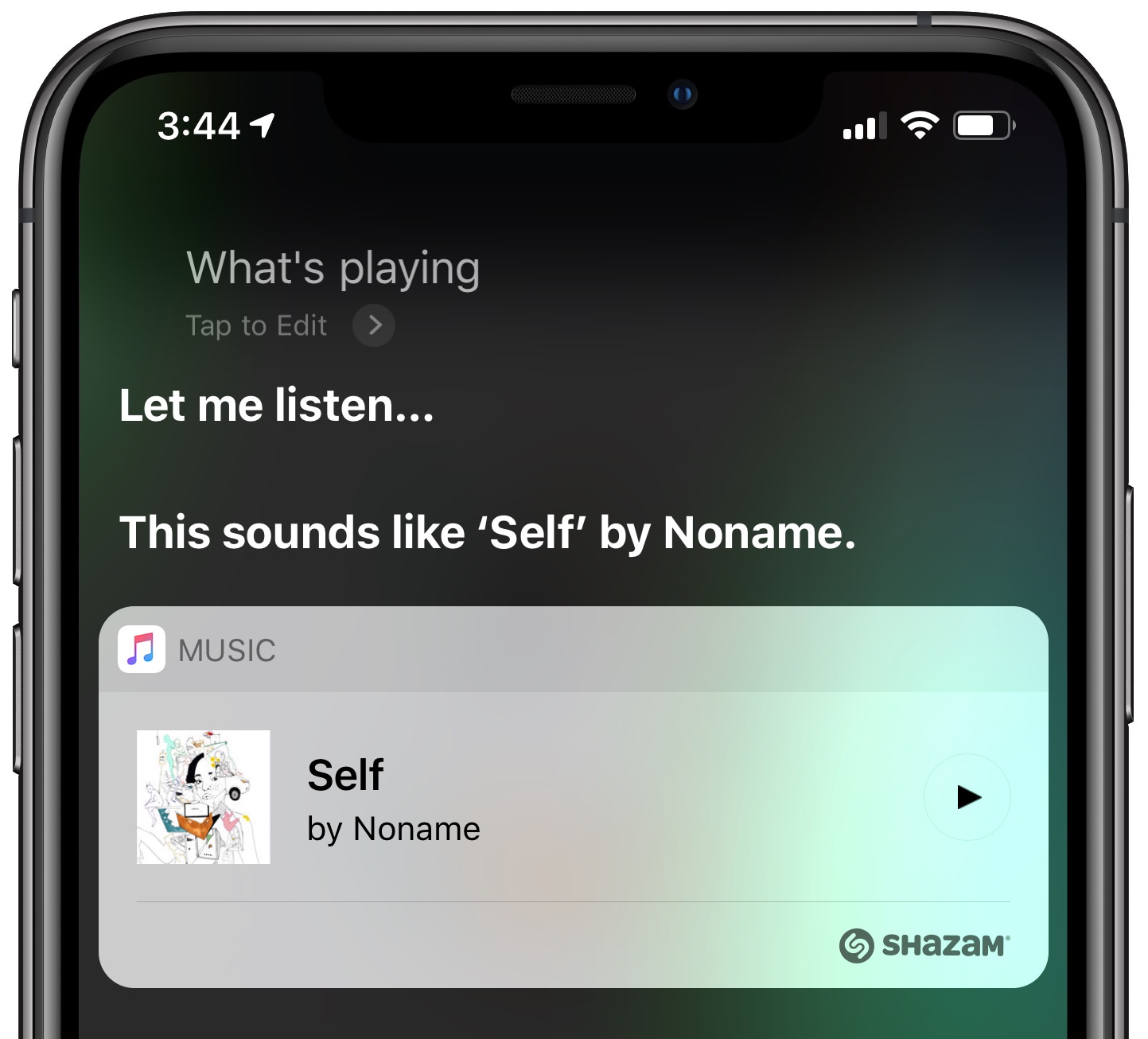

iOS 8 brought Shazam to Siri. Since then, you have been able to ask the iOS voice assistant: “What's this song called?”

The current Shazam app (universal; download) offers no significant added value over the music recognition already built into iOS. The question about the $400 million acquisition is therefore a fair one. Daniel Eran Dilger builds his explanation in a way that is easy to follow.

With or without Shazam, Apple could already build more sophisticated applications of visual recognition right into its iOS camera—potentially in the future, even in the background—allowing iOS devices to “see” not just QR Codes but recognized posters, billboards, products and other objects, and suggest interactions with them, without manually launching the camera. But Shazam gives Apple a way to demonstrate the value of its AR and visual ML technologies, and to apply these in partnerships with global brands. […]

From that perspective, it’s pretty obvious why Apple bought Shazam. It wants to own a key component supporting AR experiences, as well as flesh out applications of visual recognition. This also explains why Apple is investing so much into the core A12 Bionic silicon used to interpret what camera sensors see, as well as the technologies supporting AR—combining motion sensing and visual recognition to create a model for graphics augmented into the raw camera view.